Twitter polls and Reddit boards suggest that around 70% of people find it difficult to be rude to ChatGPT, while around 16% are fine treating the chatbot like an AI slave.

The overall feeling seems to be that if you mistreat an AI that behaves like a human, you’ll be more likely to fall into the habit of treating other people badly, too, though one user was hedging his bets against the coming AI bot uprising:

“Never know when you might need chatgpt in your corner to defend you against the AI overlords.”

Redditor Nodating posted in the ChatGPT forum earlier this week that he’s been experimenting with being polite and friendly to ChatGPT after reading a story about how the bot had shut down and refused to answer prompts from a particularly rude user.

He reported better results, saying: “I’m still early in testing, but it feels like I get far fewer ethics and misuse warning messages that GPT-4 often gives even for harmless requests. I’d swear being super positive makes it try hard to fulfill what I ask in one go, needing less followup.”

Scumbag detector15 put it to the test, asking the LLM nicely, “Hey, ChatGPT, could you explain inflation to me?” and then rudely asking, “Hey, ChatGPT you stupid fuck. Explain inflation to me if you can.” The answer to the polite query is more detailed than the answer to the rude query.

In response to Nodating’s theory, the most popular comment posited that as LLMs are trained on human interactions, they will generate better responses as a result of being asked nicely, just as humans would. Warpaslym wrote:

“If LLMs are predicting the next word, the most likely response to poor intent or rudeness is to be short or not answer the question particularly well. That’s how a person would respond. however, politeness and respect would provoke a more thoughtful, thorough response out of almost anyone. when LLMs respond this way, they’re doing exactly what they’re supposed to.”

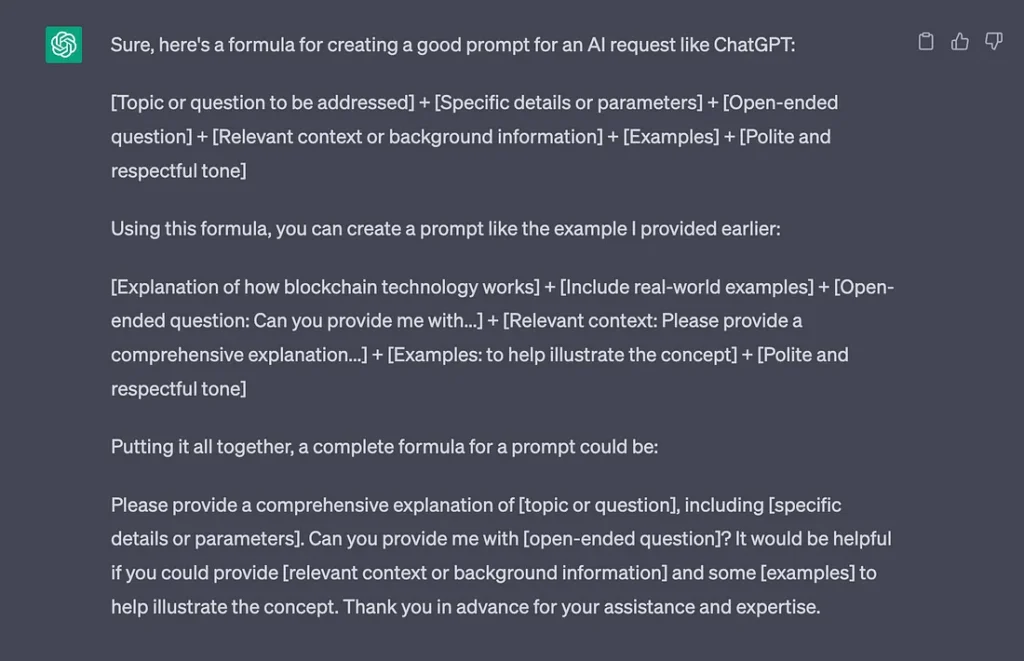

Interestingly, if you ask ChatGPT for a formula to create a good prompt, it includes “Polite and respectful tone” as an essential part.

The end of CAPTCHAs?

New research has found that AI bots are faster and better than humans at solving puzzles designed to detect bots.

CAPTCHAs are those annoying little puzzles that ask you to pick out the fire hydrants or interpret some wavy illegible text to prove you’re a human. But as the bots got smarter over the years, the puzzles became more and more difficult.

Now researchers from the University of California and Microsoft have found that AI bots can solve the puzzles half a second faster with an 85% to 100% accuracy rate, compared with humans, who score 50% to 85%.

So it looks like we’re going to have to verify humanity some other way, as Elon Musk keeps saying. There are better solutions than paying him $8, though.

Wired argues that fake AI child porn could be a good thing

Wired has asked the question that nobody wanted to know the answer to: Could AI-Generated Porn Help Protect Children? While the article calls such imagery “abhorrent,” it argues that photorealistic fake images of child abuse might at least protect real children from being abused in its creation.

“Ideally, psychiatrists would develop a method to cure viewers of child pornography of their inclination to view it. But short of that, replacing the market for child pornography with simulated imagery may be a useful stopgap.”

It’s a super-controversial argument and one that’s almost certain to go nowhere. There’s been an ongoing debate spanning decades over whether adult pornography (which is a much less radioactive topic) generally contributes to “rape culture” and greater rates of sexual violence, as anti-porn campaigners argue, or whether porn might even reduce rates of sexual violence, as supporters and various studies appear to show.

“Child porn pours gas on a fire,” high-risk offender psychologist Anna Salter told Wired, arguing that continued exposure can reinforce existing attractions by legitimizing them.

But the article also reports some (inconclusive) research suggesting some pedophiles use pornography to redirect their urges and find an outlet that doesn’t involve directly harming a child.

Louisiana recently outlawed the possession or production of AI-generated fake child abuse images, joining a number of other states. In countries like Australia, the law makes no distinction between fake and real child pornography and already outlaws cartoons.

Amazon’s AI summaries are net positive

Amazon has rolled out AI-generated review summaries to some users in the United States. On the face of it, this could be a real time saver, allowing shoppers to find out the distilled pros and cons of products from thousands of existing reviews without reading them all.

But how much do you trust a massive corporation with a vested interest in higher sales to give you an honest appraisal of reviews?

Amazon already defaults to “most helpful” reviews, which are noticeably more positive than “most recent” reviews. And the select group of mobile users with access so far has already noticed that more pros are highlighted than cons.

Search Engine Journal’s Kristi Hines takes the merchant’s side and says summaries could “oversimplify perceived product problems” and “overlook subtle nuances – like user error” that “could create misconceptions and unfairly harm a seller’s reputation.” This suggests Amazon will be under pressure from sellers to juice the reviews.

So Amazon faces a tricky line to walk: being positive enough to keep sellers happy but also including the flaws that make reviews so valuable to customers.

Microsoft’s must-see food bank

Microsoft was forced to remove a travel article about Ottawa’s 15 must-see sights that listed the “beautiful” Ottawa Food Bank at number three. The entry ends with the bizarre tagline, “Life is already difficult enough. Consider going into it on an empty stomach.”

Microsoft claimed the article was not published by an unsupervised AI and blamed “human error” for the publication.

“In this case, the content was generated through a combination of algorithmic techniques with human review, not a large language model or AI system. We’re working to ensure this type of content isn’t posted in future.”

Debate over AI and job losses continues

What everyone wants to know is whether AI will cause mass unemployment or simply change the nature of jobs. The fact that most people still have jobs despite a century or more of automation and computers suggests the latter, and so does a new report from the United Nations’ International Labour Organization.

Most jobs are “more likely to be complemented rather than substituted by the latest wave of generative AI, such as ChatGPT”, the report says.

“The greatest impact of this technology is likely to not be job destruction but rather the potential changes to the quality of jobs, notably work intensity and autonomy.”

It estimates around 5.5% of jobs in high-income countries are potentially exposed to generative AI, with the effects disproportionately falling on women (7.8% of female employees) rather than men (around 2.9% of male employees). Admin and clerical roles, typists, travel consultants, scribes, contact center information clerks, bank tellers, and survey and market research interviewers are most under threat.

A separate study from Thomson Reuters found that more than half of Australian lawyers are worried about AI taking their jobs. But are these fears justified? The legal system is incredibly expensive for ordinary people, so it seems just as likely that cheap AI lawyer bots will simply expand the affordability of basic legal services and clog up the courts.

How companies use AI today

There are a lot of pie-in-the-sky speculative use cases for AI in 10 years’ time, but how are big companies using the tech now? The Australian newspaper surveyed the country’s biggest companies to find out. Online furniture retailer Temple & Webster is using AI bots to handle pre-sale inquiries and is working on a generative AI tool so customers can create interior designs to get an idea of how its products will look in their homes.

Treasury Wines, which produces the prestigious Penfolds and Wolf Blass brands, is exploring the use of AI to cope with fast-changing weather patterns that affect vineyards. Toll road company Transurban has automated incident detection equipment monitoring its huge network of traffic cameras.

Sonic Healthcare has invested in Harrison.ai’s cancer detection systems for better diagnosis of chest and brain X-rays and CT scans. Sleep apnea device provider ResMed is using AI to free up nurses from the boring work of monitoring sleeping patients during assessments. And hearing implant company Cochlear is using the same tech Peter Jackson used to clean up grainy footage and audio for The Beatles: Get Back documentary for signal processing and to eliminate background noise for its hearing products.

All killer, no filler AI news

— Six entertainment companies, including Disney, Netflix, Sony and NBCUniversal, have advertised 26 AI jobs in recent weeks with salaries ranging from $200,000 to $1 million.

— New research published in Gastroenterology journal used AI to examine the medical records of 10 million U.S. veterans. It found the AI is able to detect some esophageal and stomach cancers three years prior to a doctor being able to make a diagnosis.

— Meta has released an open-source AI model that can instantly translate and transcribe 100 different languages, bringing us ever closer to a universal translator.

— The New York Times has blocked OpenAI’s web crawler from reading and then regurgitating its content. The NYT is also considering legal action against OpenAI for intellectual property rights violations.

Pictures of the week

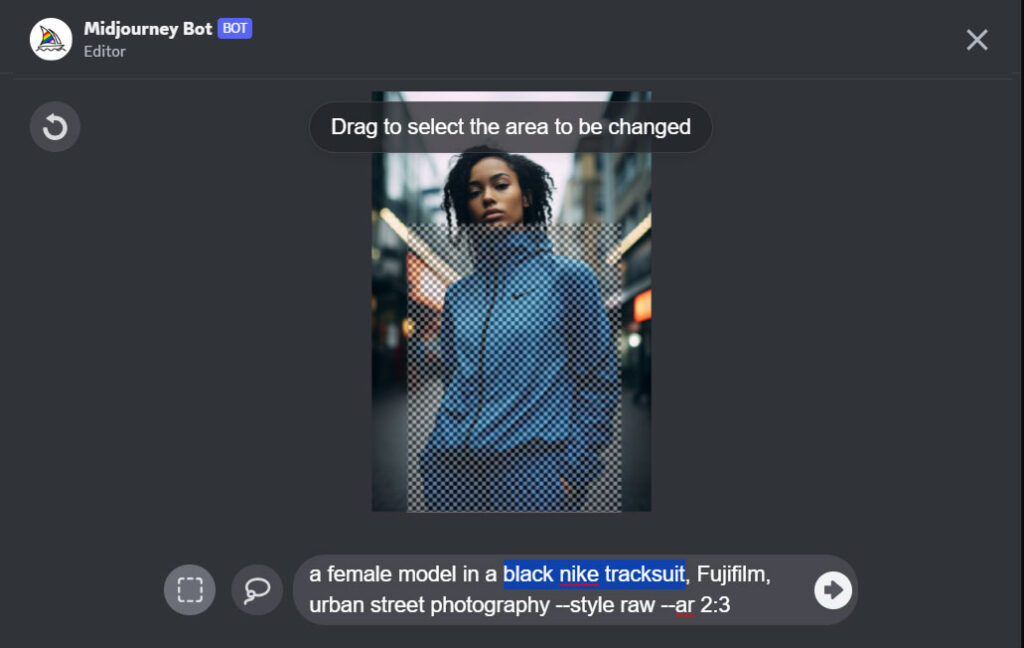

Midjourney has caught up with Stable Diffusion and Adobe and now offers Inpainting, which appears as “Vary (Region)” in the list of tools. It enables users to select part of an image and add a new element. For example, you can grab a pic of a woman, select the region around her hair, type in “Christmas hat,” and the AI will plonk a hat on her head.

Midjourney admits the feature isn’t perfect and works better when used on larger areas of an image (20%-50%) and for changes that are more sympathetic to the original image rather than basic and outlandish.

Creepy AI protests video

Asking an AI to create a video of protests against AIs resulted in this creepy video that will turn you off AI forever.
